The definitive guide to effective online surveys

Surveys have long been used to gather information about people’s behaviors and preferences. Businesses, academic institutions, and governments use them, in the form of opinion polls, ad tests, or key performance indicator (KPI) tracking, to guide policy decisions, develop new products, and improve service offerings.

What makes surveys so valuable is our ability to generalize from the data we capture. That is, when we recruit a sample of people to take our survey, we can use that data to make inferences about the population we are interested in. If, for example, you select a truly random sample of 250 millennials to take your survey, this can be sufficient to understand the broader millennial population.
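To put the 250-respondent example in perspective, here is a minimal sketch of the standard normal-approximation margin of error for a proportion estimated from a simple random sample. It assumes a truly random sample drawn from a large population, 95% confidence (z = 1.96), and the conservative choice p = 0.5. The function name is hypothetical, and the calculation does not account for non-response error.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion.

    Assumes a simple random sample of size n from a large population
    (no finite-population correction). p = 0.5 gives the most conservative
    (widest) result; z = 1.96 corresponds to 95% confidence.
    """
    if n <= 0:
        raise ValueError("sample size must be positive")
    return z * math.sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    for n in (250, 500, 1000):
        print(f"n = {n:>4}: +/- {margin_of_error(n):.1%}")
```

Under these assumptions, 250 respondents give a margin of error of roughly ±6.2 percentage points, and quadrupling the sample to 1,000 only halves it (about ±3.1 points).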

However, insights professionals face challenges recruiting individuals to take surveys. Whether through social media, TV ads, promotions, or even junk email, consumers are inundated with a constant barrage of marketing messages, and insights professionals must compete for their attention. The problem has become particularly pronounced over the last decade, during which survey participation rates have steadily declined as fewer people are willing to take part (1).

Declining response rates are obviously a cause for concern: the more difficult it is to recruit people to take a survey, the more time, energy, and cost recruitment requires. Falling response rates also make non-response error more likely.

Non-response error occurs when the people who take a survey differ from the people who decline. This can bias the survey data and give the researcher an incomplete picture of the population. As with the “silent majority,” we may not hear from a segment of our customer base, but their opinions still matter to us.

How can we address this problem and improve response rates to our surveys? In this eBook, we’ll discuss a handful of techniques that can help engage respondents and encourage survey participation, covering the email invite, reminders, incentives, and survey design. The list is by no means exhaustive, but these simple, effective techniques should be part of every survey-based research project if you want to get the most out of your research and achieve the insights you seek.

Email invite

The email invite is the first point of contact with the respondent, which makes it crucial for recruiting. Few things improve response rates as much as a sender name the recipient recognizes and trusts. People are more likely to take a survey from someone they know or have an existing relationship with. For instance, email recipients pay closer attention to messages from highly recognized brands, because those brands have a reputation for trustworthiness. Survey sponsors with ‘high authority’ (e.g., government or academic institutions) also achieve relatively high online survey response rates for the same reason: consumers trust that these institutions are not sending spam and likely have valid reasons for asking them to take a survey.

Although you cannot control who the online survey sponsor is, you can draw attention to the sponsor and/or to your relationship with the respondent when it is advantageous to do so. One of the easiest places to do this is the survey invite. Online survey invites should be carefully constructed, personalized, and, if possible, branded. Corporate logos, professional styling, or even personable language can establish a connection with the recipient and drive traffic to your survey. As the gateway to your survey, a well-executed invite shows the recipient that you value their time. Conversely, a sloppy invite conveys the message that you don’t care, so why should they?

Here are three examples: the first shows a poorly designed invite, the second shows how to strive for authenticity, and the third shows how to be personal and concise.

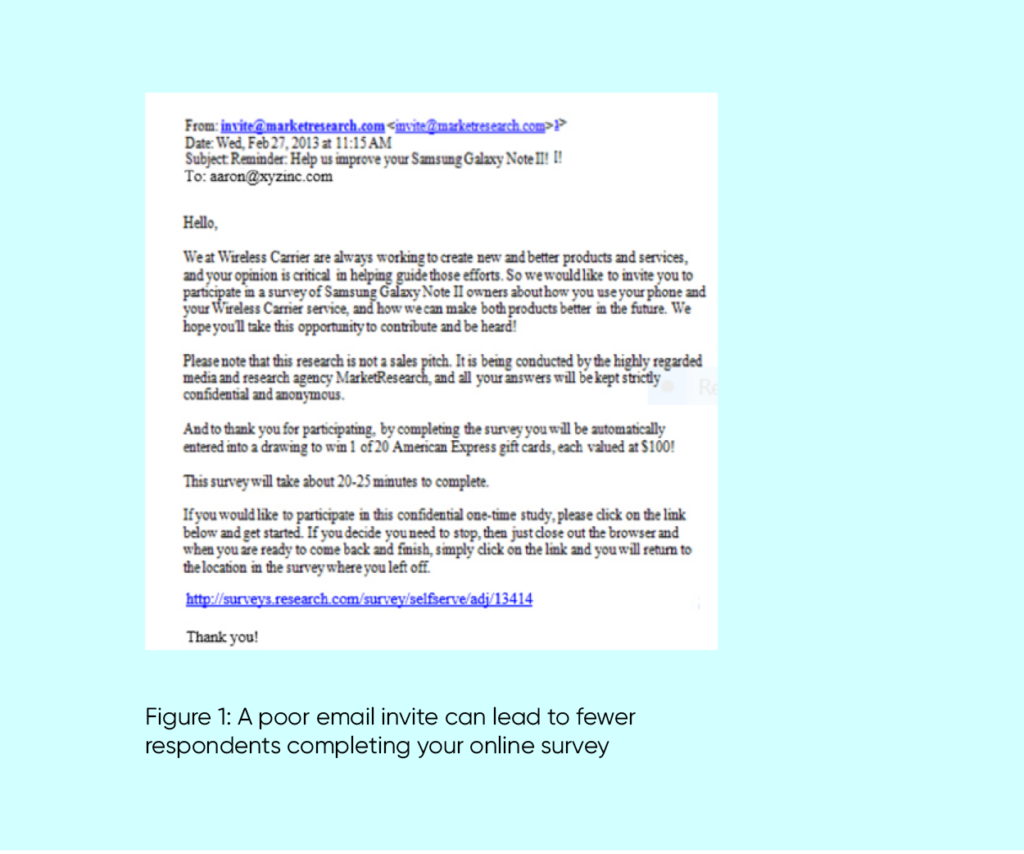

Example 1 – a poorly designed invite

Figure 1 is a screenshot of an email invite from a survey that had poor response rates. Email open rates and click-through rates were also poor, indicating a problem with the invite. It is not a very ‘inviting’ invitation: it is visually unengaging, and the copy is generic and wordy. No attempt is made to establish a connection with the reader. There are no personalized elements, and the survey sponsor is introduced with the generic name “Wireless Carrier.” Furthermore, nothing about the survey URL suggests that it leads to a trustworthy website. A recipient might reasonably be suspicious of it, which leads to poor survey participation.

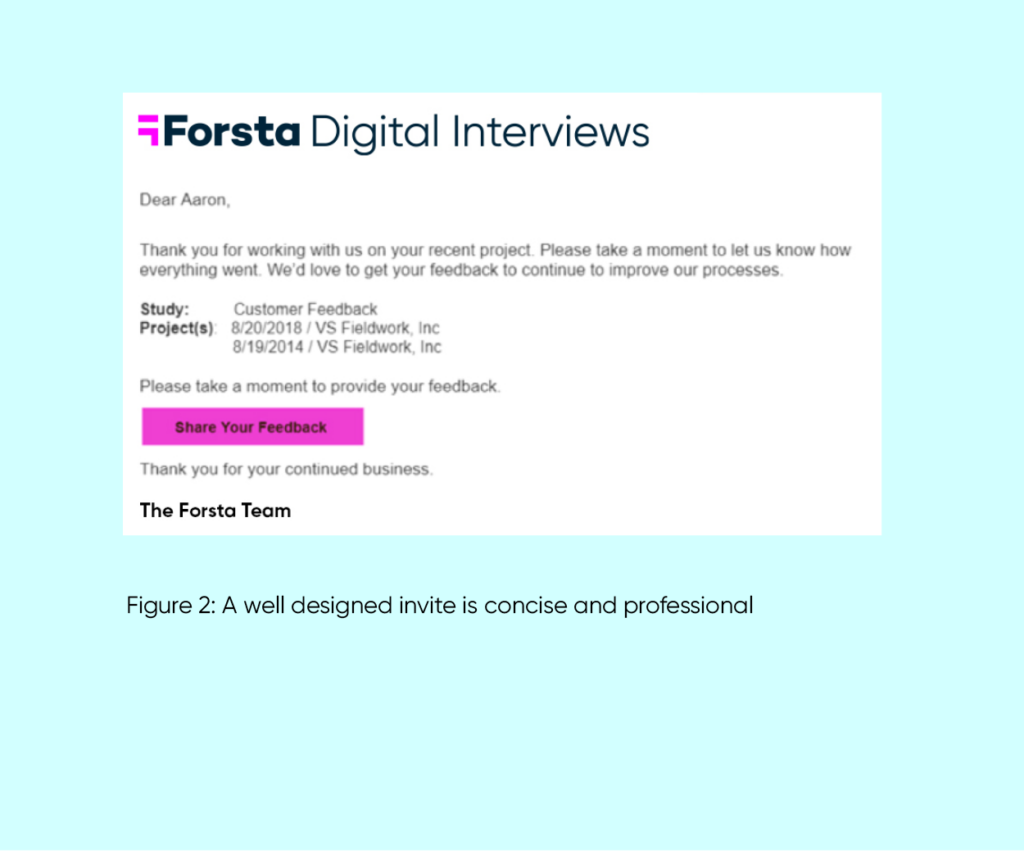

Example 2 – strive for authenticity

Here’s an example of an email invite that Forsta uses to survey customers for feedback (Figure 2). This invite includes several elements that help encourage survey participation. In the email header, using a name the recipient recognizes works well; in our case, we use “Forsta,” which references a product they recently purchased. We also keep the subject line short and descriptive. As a general rule, the subject line just needs to convey what the email is about, so there is no need to get fancy. The invite is also branded, and the copy is friendly and concise. It includes a personalized salutation and a call to action. We explain the purpose of the online survey and, most importantly, tell participants why it benefits them. We also list the projects we want them to evaluate us on. Taken together, these elements make the invite an authentic and sincere request for the recipient’s time.

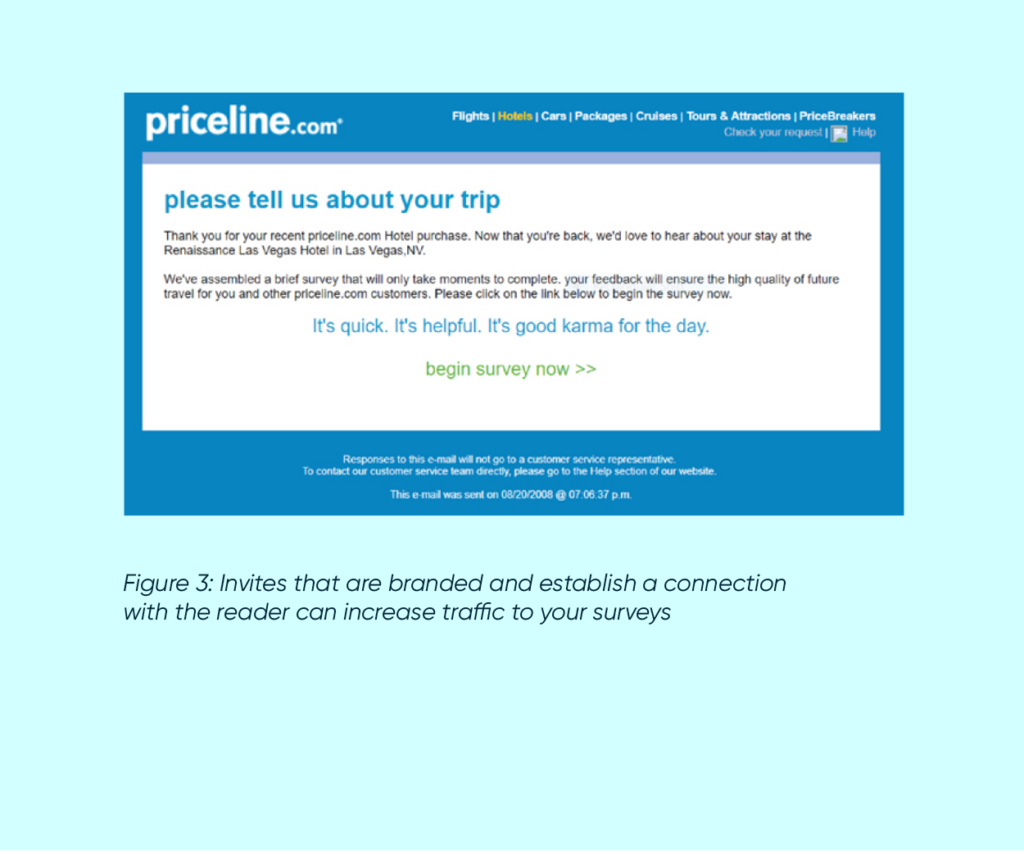

Example 3 – be personal and concise

Here’s another example of a survey invite from priceline.com that was sent to a customer after a recent trip (Figure 3). Many of the elements discussed in the previous example are also present here:

- Use of the corporate logo, fonts, and colors clearly establishes that this email is from priceline.com

- Reference to the recipient’s trip experience further validates their connection to priceline.com

- The email uses personal and concise language. The tagline reflects the priceline.com brand voice, and the copy states the purpose of the survey and why it benefits the recipient

- Email header is branded; subject line is clear and descriptive

Often overlooked, the survey invite is one of the most important components of a research study. It is the hook to your online survey. Even the best survey in the world will not matter without a properly constructed invite to bring people to it. A well-crafted invite, on the other hand, can engage readers, drive traffic to your survey, and convert a passive reader into an active participant who wants to share their opinions and feedback with you.

Reminders

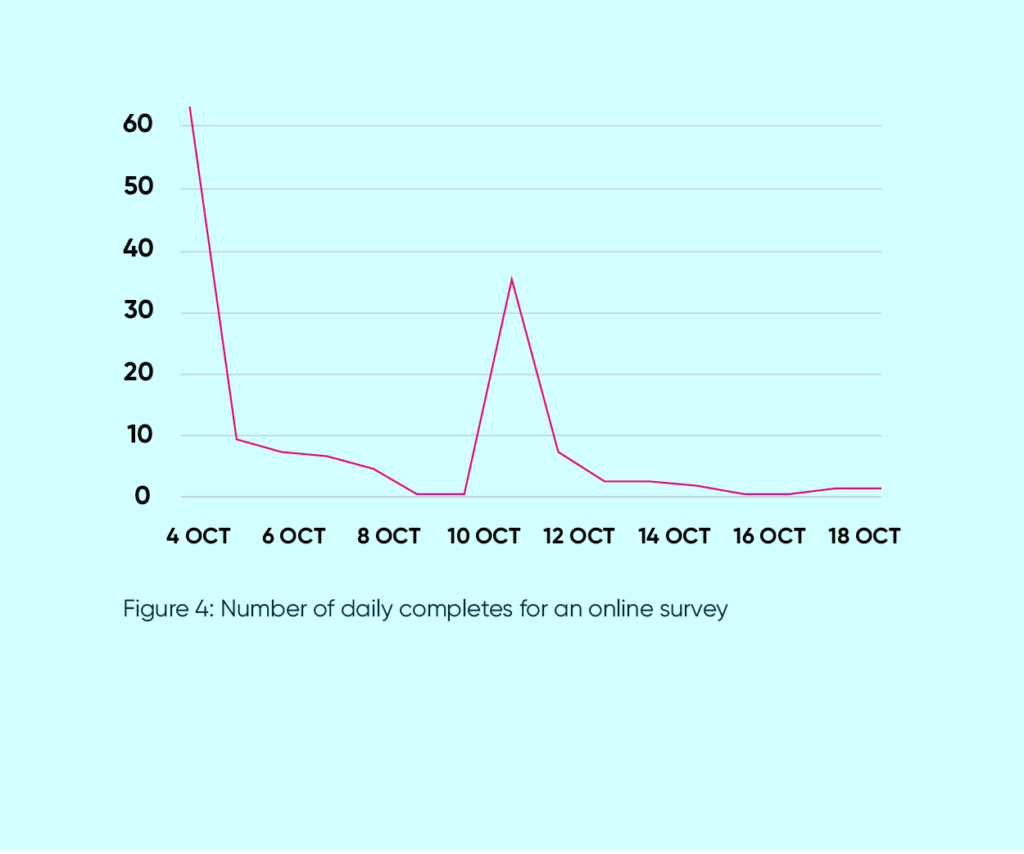

Reminders are an easy and effective way to increase response rates for online surveys. After you’ve crafted and deployed your initial email invite, always send a follow-up email to contacts who have not yet taken the survey. This serves as a helpful reminder and puts your invite back at the top of their inbox if they missed it the first time around. A simple reminder or two can double the response rate to your survey. The chart in Figure 4, part of a field report, shows the daily number of qualified completes for an online survey. The email campaign for this study launched on October 4, resulting in a rush of completed interviews. A reminder sent on October 11 further increased the number of completes.

This pattern is typical of most studies. Almost all respondents who engage with a survey do so within the first three days of receiving an email invite or reminder. If a respondent doesn’t fill out your survey after the initial invite, the reminder email is your second chance to get them to do so.

Sending reminder emails can help improve typical survey response rates

Reminders are typically sent 3-7 days after the initial email invite. The exact timing depends on how quickly the results are needed and how soon the number of new completes begins to stall. In the example above, the reminder was sent on October 11, but it could just as well have gone out on October 8 or 9, by which point the number of new completes had already diminished.

Do not wait more than a week to send a reminder; waiting too long hurts overall response rates. Reminders are for people who are on the fence, or who intend to take your survey but haven’t had the chance. Moving the invite back to the top of their inbox helps these recipients, but if you wait too long, their interest in your survey fades.
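One way to turn “send when new completes stall, but within 3-7 days and never later than a week” into a repeatable rule is sketched below. The stall threshold (here, 20% of the busiest day) and the function name are hypothetical choices rather than an established standard; tune them against your own field reports.

```python
def suggest_reminder_day(
    daily_completes: list[int],
    stall_fraction: float = 0.2,
    earliest: int = 3,
    latest: int = 7,
) -> int:
    """Suggest how many days after the invite to send the first reminder.

    daily_completes[0] holds completes on the invite day, [1] the next day, etc.
    Hypothetical heuristic: pick the first day in [earliest, latest] whose
    completes fall below stall_fraction of the peak day; if no stall is
    observed yet, fall back to `latest` (the one-week cap).
    """
    if not daily_completes:
        return latest
    threshold = stall_fraction * max(daily_completes)
    last_observed = min(latest, len(daily_completes) - 1)
    for day in range(earliest, last_observed + 1):
        if daily_completes[day] < threshold:
            return day
    return latest


if __name__ == "__main__":
    field_report = [120, 80, 45, 30, 18, 9, 5]  # made-up daily completes
    print(suggest_reminder_day(field_report))  # 4
```

With these made-up numbers the peak is 120, so the stall threshold is 24 completes; day 3 (30) is still above it and day 4 (18) is the first day below it.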

How many reminders to send

If additional completes are needed, a second reminder can be sent about a week after the first. Think carefully before sending any reminders beyond that; more than three isn’t recommended. While each reminder increases response rates, returns diminish with each one, and too many reminders will annoy recipients and generate spam complaints. This is especially important with branded sends, because spamming creates negative perceptions of the brand. As a rule of thumb, expect the first reminder to pick up about half as many new completes as the initial send, and each subsequent reminder to pick up about half as many as the one before it.
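The halving rule of thumb is easy to turn into a projection for fielding plans. Here is a minimal sketch, assuming that rule holds exactly; the function name and the 200-complete starting figure are illustrative.

```python
def project_completes(initial_completes: float, reminders: int) -> list[float]:
    """Project new completes per send under the halving rule of thumb.

    Index 0 is the initial invite; each reminder is assumed to bring in
    half as many new completes as the send before it.
    """
    waves = [float(initial_completes)]
    for _ in range(reminders):
        waves.append(waves[-1] / 2)
    return waves


if __name__ == "__main__":
    waves = project_completes(200, reminders=3)
    print(waves)       # [200.0, 100.0, 50.0, 25.0]
    print(sum(waves))  # 375.0
```

Under this rule, two reminders bring the projected total to 1.75 times the initial send, while a third adds only another 25 completes here. That shrinking increment, together with the risk of spam complaints, is why more than three reminders is rarely worthwhile.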

Content of the reminders

Provided you have written a proper invite, simply resending an exact copy of it makes an effective reminder. You may choose to include the word “Reminder” in the subject line or use a new subject line altogether. Reminders are an important and simple way to boost response rates for online surveys, so be sure to include them in your overall research and fielding plan.

Incentives

There may be situations where you anticipate difficulty recruiting respondents to your online survey, and a well-crafted invite and email reminders alone may not be sufficient. In such cases, consider an incentive. Incentives come in many forms, but an incentive is essentially a reward provided in exchange for completing a survey. A well-placed incentive can help improve your online survey response rates so you get the feedback and insights you seek.

Different types of incentives

Generally, there are two types of incentives: monetary and non-monetary. Monetary incentives include gift cards, cash, prepaid credit cards, coupons, and so on. Non-monetary incentives can be many things, such as access to an industry report, a vacation giveaway, or a sweepstakes to win an iPad. When deciding which type of incentive to use in your online survey, consider both the cost to you and what your survey takers value. A businessperson may value access to industry data, while if your survey is gathering feedback from sports consumers, an autographed basketball or tickets to an event may be a highly valued incentive. It pays to be creative, since a monetary reward isn’t always the way to go.

When to use an incentive

Not all online surveys need an incentive. Many factors influence whether people fill out surveys, and an incentive is appropriate if you anticipate difficulty achieving the desired number of completed interviews. You may be targeting a rare or hard-to-reach audience. For example, executives at Fortune 1000 companies are a challenging group to recruit, and you will likely need a substantial incentive to reach them. Other indicators may also suggest the potential for low response rates to your survey.

If your survey is long or includes a difficult task (e.g., asking people to keep a log of their TV viewing), you may need to include an incentive.

Topic salience (the respondent’s interest in the survey topic) and survey sponsor (the strength of the respondent’s relationship with the survey sponsor) are also very strong drivers of participation in online surveys. If you expect your online survey to pose recruiting challenges on either front, consider an incentive.

Keep a few things in mind when using an incentive, particularly if data collection consistency is important to your research (e.g., tracking studies). An incentive engenders positive feelings toward your survey, which encourages not only participation but also more positive feedback. Studies have shown that respondents give higher product ratings or express slightly higher satisfaction when they receive a monetary incentive than when they do not. Incentives can also attract respondents who care more about the prize than about your survey. A further concern is that incentives may bias the demographic makeup of a sample, although studies have not conclusively supported this (2).

In any case, data and sample quality checks are a routine part of survey research, and they matter even more when an incentive is involved. Incentives can be a valuable tool for improving survey participation, but it would be inappropriate to compare data from a study that used incentives with data from one that did not.

How much incentive should I offer?

Five dollars is a good starting point for an incentive on a consumer survey, while $10 is the starting point for a B2B study. Your incentive may be more or less depending on the particulars of your survey and audience. Generally, the larger the incentive you offer, the higher survey participation will be. However, this relationship is not linear: your incentive must reach a certain threshold before it has a significant impact, and returns can diminish as the amount you offer increases.

Research testing cash incentives in one-dollar increments from $0 to $10 found the following (3):

- Overall, the more cash offered, the higher the response rate.

- However, there was little difference among the $0-$4 conditions.

- Only at $5-$8 was there a significant improvement over the lesser amounts. Interestingly, there was little difference among the $5-$8 conditions themselves.

- The $10 condition showed the highest response rate, at 26%.

What is the impact of an incentive?

It’s impossible to predict with certainty how much your incentive will boost survey participation, because many factors affect survey response rates. Poor survey wording, long grids, a boring survey topic, or too many sensitive questions may all hamper your efforts to field a survey. The type of incentive and the target audience also matter.

For the same reason, published experiments on the impact of incentives report widely varying results. For example, one study found that an incentive increased survey completion rates by an average of 4.2% over no incentive (4). That study also found no significant difference between the ways incentives were issued, whether lottery versus guaranteed or cash versus non-cash. A more recent review found an 18% boost in survey participation when offering $10 incentives (5), while our own work with a $2 incentive improved participation in a consumer survey by just under 10% (6). We also found no difference between a small guaranteed incentive and a larger sweepstakes payout as long as the expected value was the same (e.g., a 1 in 500 chance to win $1,000 performed the same as a guaranteed $2).

Overall, incentives, whether monetary or non-monetary, can have a positive impact and draw people to your survey. When using incentives, keep in mind what your target audience values: for some it may be cash, while for others, access to information or an exclusive event is a stronger motivator.
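The expected-value comparison in the sweepstakes example is simple arithmetic: the value of a draw to each respondent is the prize multiplied by the chance of winning. The sketch below uses Python’s standard `fractions` module to avoid floating-point rounding; the function names and the 1,000-complete figure are illustrative assumptions.

```python
from fractions import Fraction


def sweepstakes_expected_value(prize: int, one_in: int) -> Fraction:
    """Expected value per respondent of a '1 in `one_in`' chance to win `prize` dollars."""
    return Fraction(prize, one_in)


def expected_payout(value_per_complete: Fraction, completes: int) -> Fraction:
    """Expected total incentive cost for a given number of completed surveys."""
    return value_per_complete * completes


if __name__ == "__main__":
    guaranteed = Fraction(2)
    draw = sweepstakes_expected_value(1000, 500)
    print(draw == guaranteed)                 # True
    print(expected_payout(guaranteed, 1000))  # 2000
    print(expected_payout(draw, 1000))        # 2000
```

Because both options cost the same in expectation, the choice between them can rest on what your audience finds more motivating.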

Survey usability

Survey usability plays a significant role in dropout rates. Every researcher should test and take their own survey. Often, we can intuitively pinpoint what will encourage a respondent to complete or drop out of a survey. Experimental evidence is not needed to tell us where questions are confusing, text is too wordy, or the task feels tedious. If the survey does not render properly or look good to us, it will probably not look good to the respondent either.

Interactive questions

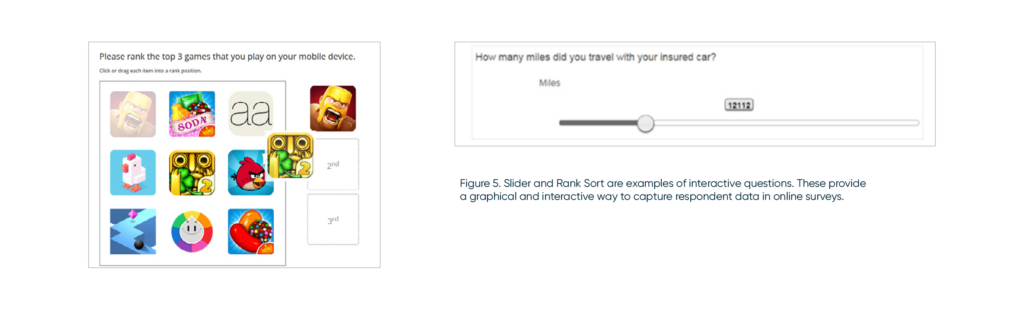

A well-designed survey is important for engaging respondents and providing a user-friendly survey-taking experience. A survey that is confusing or tedious will cause people to drop out of (abandon) your survey, which ultimately diminishes your response rates. Interactive questions are one way to enhance your survey design and provide a more engaging, user-friendly experience. In today’s digital world, consumers have many choices for online entertainment, information, and news, so successful digital content must stand out from the clutter. Interactive questions can help engage customers while they provide their feedback.

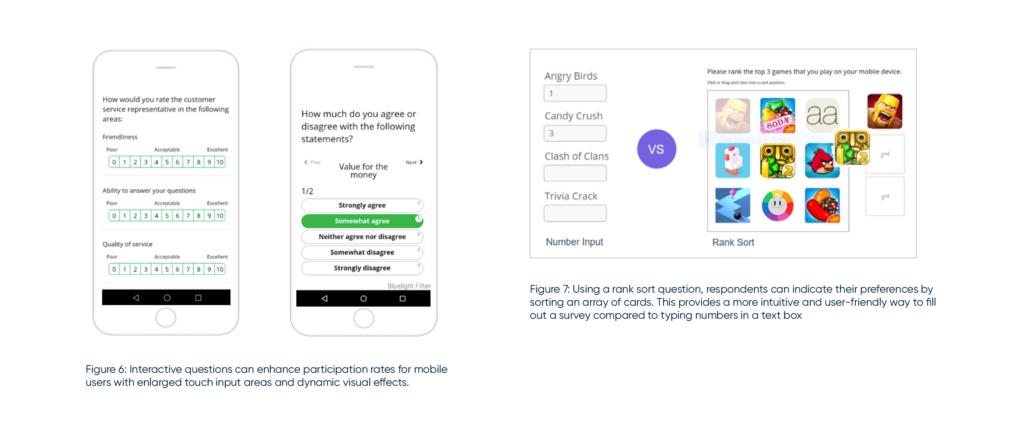

Interactive question types provide a graphical, interactive way to capture respondent data in online surveys. Compared to standard input forms such as radio buttons and checkboxes, interactive questions leverage technologies like HTML5 and JavaScript. This allows us to build customized, flexible survey forms and create a more interactive, user-friendly survey environment. Given the internet technologies in use today, consumers expect a dynamic and engaging experience online. Interactive questions are also very important in today’s mobile world. For example, traditional radio buttons may be too small for mobile device screens. Figure 6 illustrates how an interactive ‘card sort’ uses big buttons to provide a more mobile-friendly format than standard grid questions. The card sort enlarges input areas and font sizes for easy tapping and clear reading on smaller screens. Styled buttons can also animate, appearing to be pressed when tapped, which gives the respondent immediate feedback and an engaging visual effect.

Interactive questions can also reduce the cognitive burden of responding. Figure 7 shows a rank order task. A traditional question type would have respondents rank their preferences by entering a rank number in a text box. With an interactive drag-and-drop task, respondents instead physically arrange a set of cards, which is a much more intuitive and user-friendly way to answer. Interactive questions such as these have been shown to improve survey participation and engagement.

While interactive questions have many benefits, they need to be implemented with due diligence. Poorly executed designs can increase dropout rates, reduce the tendency to read questions, and even confuse respondents. For example, we have seen designs in which a respondent navigated a spaceship and had to “shoot” an item to indicate a response. The resulting data was sporadic, as respondents focused more on completing the task than on the question the researcher was asking. In addition, poor styling or color choices can bias the data if attractive visual elements draw respondents’ attention to some answer choices over others.

Consider these five guidelines when using interactive questions so you can create engaging surveys and minimize dropout:

- Offer a clear benefit

Standard question types, such as radio buttons or checkboxes, are familiar forms that are still widely used. If you’re thinking about using an interactive question, be sure it provides a clear benefit. How will it improve survey participation or usability? Will it enhance the insights you can collect from your customers? Don’t use interactive questions just to ‘jazz up’ the survey with something that feels a bit more fun. Remember, interactive questions are new forms that a survey respondent may not be familiar with. Your interactive question may require a few more clicks or ask the respondent to manipulate an object on the screen. Make sure the benefit justifies introducing something unfamiliar, or a task that takes a little longer to complete.

- Be clear on what to do and how to answer the question

Since the respondent may be encountering a new question format for the first time, any task that requires reading instructions or additional learning places extra demands on them. Ideally, it should be immediately apparent how to interact with the question. Don’t rely on respondents to read instructions. Pre-testing is a good way to confirm that the task is self-explanatory.

- Keep colors neutral and uniform in your interactive questions

Using colors as emotional signifiers (for example, red to indicate “stop” or something negative, green to indicate something positive) can introduce unexpected bias into your survey data, because people interpret colors in different ways. We’ve found that respondents avoid answer choices with aggressive or loud background colors. Therefore, keep colors uniform and balanced, and use them to create light/dark contrast. A light background and dark foreground can help scale labels stand out, and changing a button to a darker color when it is selected provides a useful visual cue.

- Check for cross-platform compatibility

Respondents can access surveys from a variety of devices and platforms. Does your interactive question function and render properly across all screen sizes? What works well on a PC may not be very usable on a mobile phone, and what works well on a mobile phone may not render well on a PC.

- Test and retest your interactive questions

Finally, the only way to ensure a proper interactive question experience is to test it. See how it works on a mobile phone, a tablet, and a laptop or desktop. Are all questions and answer options easy to read? Is the respondent’s task clear or confusing? Difficult or easy? If something isn’t right for you, it won’t be suitable for your respondents either.

Interactive questions can improve survey usability and participation. By offering additional functionality, such as graphical and interactive displays, they allow researchers to capture information in engaging, user-friendly ways that are not possible in a traditional format.

Question wording

Questionnaire writing is one of the most fundamental parts of survey research: the answers and insights you get from your survey are only as good as the questions you ask. Researchers spend a great deal of time drafting questionnaires, and countless meetings and reviews may take place over which questions to include and how to word them. Proper question wording is also crucial to the respondent experience. Questions need to be clear and succinct. A poorly worded question may confuse the respondent and fail to capture their opinions accurately, while good wording can improve survey enjoyment and participation. Here are some tips to keep in mind when writing questions, including basic wording problems to avoid:

Avoid unnecessary wording

Don’t make the respondent read more than they have to. Extra reading makes the survey experience more difficult than it needs to be, and tedious surveys lead to lower data quality.

Example: Now please think about your role models. It may help to think of individuals who achieved things you admire or mentors you’ve learned from. Using the following scale, indicate which characteristics describe the role models you’ve had in life.

Consider whether scene-setting is necessary for your question. Often, it can be simplified:

What characteristics describe the role models in your life?

Example: Why haven’t you tried Tony’s brand rice before?

- I looked for them but couldn’t find them in my store

- I had a friend / family member tell me that I should not buy that brand

- It didn’t seem appealing to me based on what I know about the brand

Each of these answer options can be shortened:

- I couldn’t find them in my store

- The brand doesn’t appeal to me

- A friend / family member advised against it

Avoid leading the respondent

Leading questions use phrasing that encourages respondents to answer a certain way. This leads to poor data quality, since you’ve biased the respondents’ answers. Strive instead to be neutral.

Example: Do you believe education is important for the future health of the country?

The way this question is asked allows only one response: the only logical answer is to agree. Instead, you may ask:

What do you see as the most important issue facing the future health of the country?

- Education

- Healthcare

- The Budget Deficit

- Other

This forces respondents to prioritize what’s important to them and prevents a “want it all” response. Questions that have only one logical answer are usually best rephrased as a trade-off among competing choices.

Example: The Toyota Camry is the best selling car in America. Would you consider buying one?

People will tend to agree with what is popular or socially acceptable. Stating that the Camry is the best selling car in America encourages the respondent to affirm the question. Instead, use more neutral wording, and ask:

Would you consider purchasing a Toyota Camry?

Seek to be understood

Avoid using technical jargon or “marketing speak”. Instead, use everyday language. It helps to be conversational as respondents will find plain language easy to read and understand at a glance. This will lead to a much more enjoyable survey experience.

Example: On a scale of 1 to 5 where 1 means ‘completely dissatisfied’ and 5 means ‘completely satisfied’, how would you rate your hotel experience?

Plain English: How satisfied were you with your hotel experience…?

Example: At which of the following stores or websites did you actually shop for clothing for your most recent purchase?

Plain English: The last time you bought clothes, where did you buy them?

Be careful about using slang and vernacular that might not be understood, but do follow the conversational norms of your intended audience; this yields quicker, better-quality responses.

Example: Are you against or for Proposition ABC?

Instead: Are you for or against Proposition ABC?

Be sensitive

Respondents may drop out of a survey if they encounter a question that asks for sensitive information. In such situations, avoid direct questioning or asking for specifics. You can also make the question optional or provide an opt-out answer such as “prefer not to answer.”

Example: What is your position towards the LGBTQ community?

- I strongly support

- I somewhat support

- Neutral

- I’m somewhat against

- I’m strongly against

Respondents are likely to avoid giving socially unacceptable answers. A less extreme set of answer choices would be more suitable in this case:

- I strongly identify with / advocate for

- I identify with / advocate for

- I don’t identify with / advocate for

- Not sure

Example: What is your annual income?

Asking directly for someone’s income may lead to dropout, because income is a sensitive topic. Instead, ask:

Which of the following categories best describes your annual income?

- Less than $15,000

- $15,000 to $24,999

- $25,000 to $34,999

- $35,000 to $49,999

- $50,000 to $74,999

- $75,000 to $99,999

- $100,000 to $124,999

- $125,000 to $149,999

- $150,000 or more

- I prefer not to answer

Stay consistent

Few things are more confusing than a rating scale formatted or worded one way, followed by a completely different structure on another question. Here are some areas to watch out for:

- Rating scale consistency – make sure the positive and negative ends of the scale are aligned in the same direction throughout

- Keep scale labels consistent where it makes sense

- If presenting the same answer options across multiple questions, keep the row order the same

- Keep font and styling consistent

- Keep any visuals (e.g., brand logos) consistent throughout

Respondents will spend the most time in a survey reading your questions and answer options. It pays, then, for you, the researcher, to spend the most time considering how these are written. You want not only to capture accurate data but also to provide a clear and friendly survey experience for your respondents. Confusing and long-winded questions distract from the insights you want and turn respondents off your survey. Seek to be understood, use simple and concise language, and strive for a smooth, frustration-free survey.
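The rating-scale checks listed under “Stay consistent” can be partly automated if your questionnaire is available as data. The sketch below assumes a hypothetical representation in which each rating question is a list of its scale labels in display order; real survey platforms export questionnaires in their own formats, so the class and function names here are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class RatingQuestion:
    qid: str
    labels: list[str]  # scale labels in display order (e.g., left to right)


def check_scale_consistency(questions: list[RatingQuestion]) -> list[str]:
    """Compare each rating question's labels with those of the first question.

    A reversed label order means the positive and negative ends of the scale
    have swapped sides. Any other difference is flagged for manual review,
    since different labels are sometimes legitimate.
    """
    if not questions:
        return []
    reference = questions[0]
    issues = []
    for q in questions[1:]:
        if q.labels == reference.labels:
            continue
        if q.labels == list(reversed(reference.labels)):
            issues.append(f"{q.qid}: scale runs opposite to {reference.qid}")
        else:
            issues.append(f"{q.qid}: labels differ from {reference.qid}; check if intended")
    return issues


if __name__ == "__main__":
    five_point = ["Very dissatisfied", "Dissatisfied", "Neutral", "Satisfied", "Very satisfied"]
    questionnaire = [
        RatingQuestion("Q1", five_point),
        RatingQuestion("Q2", five_point),
        RatingQuestion("Q3", list(reversed(five_point))),
    ]
    for issue in check_scale_consistency(questionnaire):
        print(issue)  # Q3: scale runs opposite to Q1
```

A check like this only catches mechanical inconsistencies; whether a different set of labels is appropriate for a given question is still a judgment call for the researcher.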

Better surveys mean better insights

Invites, reminders, incentives, and survey usability are all routine parts of conducting survey research, and improving response rates is often a matter of executing and presenting them well. Although the purpose of a survey may be to investigate key business questions, also consider the respondent’s motivation for completing it. They may take part for financial reasons, or for altruistic ones: they may want to help you, or they may feel their voice can have an impact and that you will value their opinion. Surveys are a touchpoint for engaging in dialogue with customers and creating a positive brand experience. A smooth and engaging survey experience, from professionally branded invites to user-friendly questions, does more than maximize response rates: better surveys mean better insights and more positive customer engagement with your brand.
